Graph Ranking - part 2

Posted on 22/06/2021 by Roberto Cornacchia

In the first part of this blog mini-serie about graph ranking we introduced a well-known domain and some simple ways of defining relevance and ranking over graph nodes within Spinque Desk.

This second post is about ranking graph nodes using their text properties. While exploring different approaches to multi-property raking, we introduce the concept of result combination.

Ranking movies by title

Ranking by text (often referred to as full-text ranking) is a common and powerful tool in the hands of search engines.

Most popular retrieval models for full-text ranking work by comparing query keywords to a statistical model of the text extracted from the objects to search. Such objects are flat text documents in classical text retrieval, and Movie nodes in our graph. This comparison produces a probability of relevance to the query keywords for each of the objects considered, which finally allows us to rank them.

Terminology

In text ranking, it is common to encounter the following terms with similar meaning:

- 'term': indicates a text unit (often but not necessarily a word) and can be used in several contexts.

- 'keyword': a term that is used for a specific purpose, normally as part of a query.

- 'token': the result of a text pre-processing phase that splits up the original text into smaller units. Often equivalent to a single word, but it can also refer to an n-gram or an entire phrase.

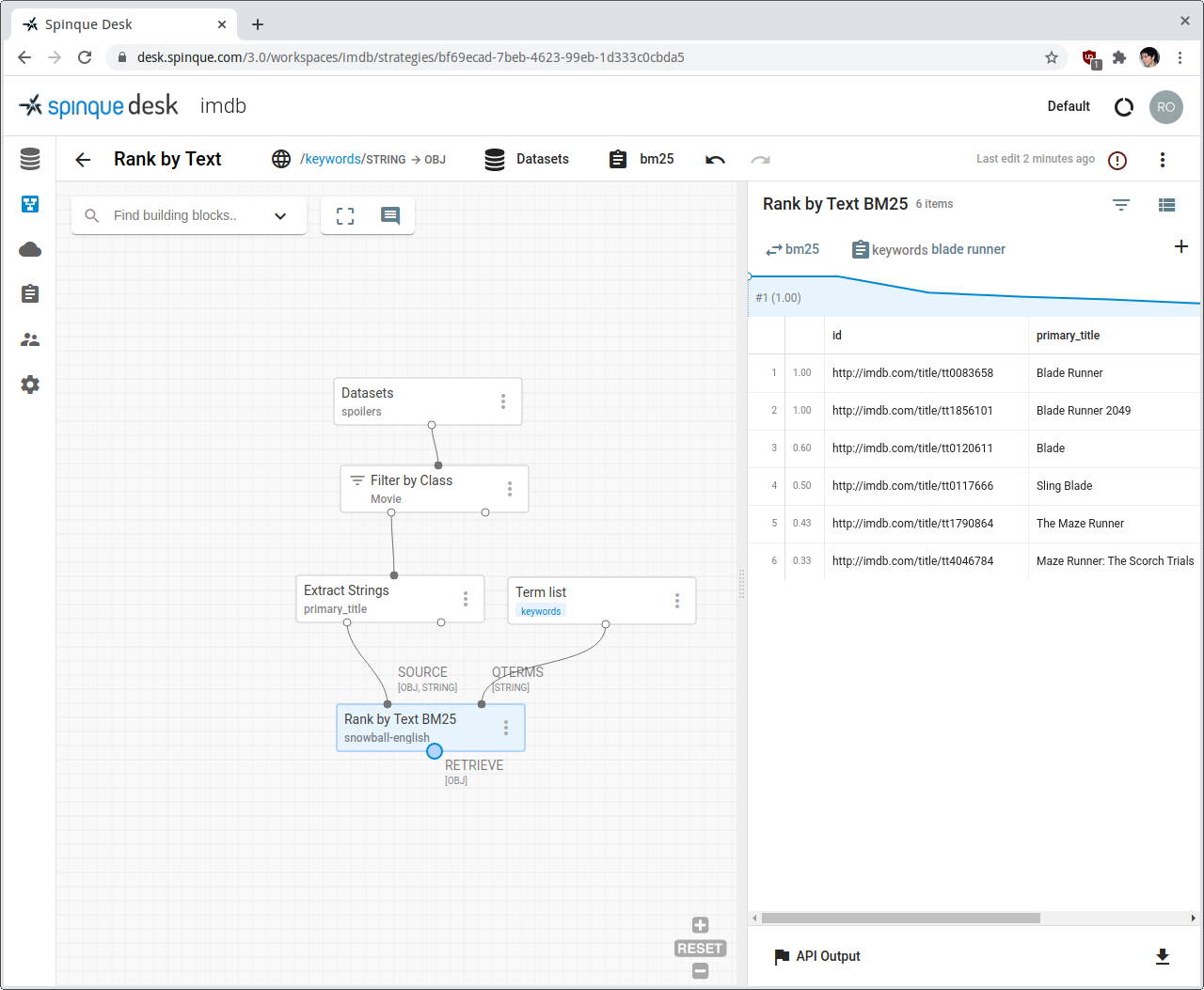

In this example we use block Rank by Text BM25, which implements the state-of-the-art retrieval model Okapi BM25.

The Rank by Text block, which we see in the screenshot above, outputs ranked nodes and has two inputs:

- SOURCE [OBJ,STRING]: This input identifies pairs of nodes to search (all nodes have internal type OBJ) and the text (type STRING) that is to be considered by the search algorithm.

- QTERMS [STRING]: The query terms (a.k.a. query keywords)

Our intent is to rank movies by how well their title matches the query keywords. Therefore, the SOURCE input should be composed by [ Movie, Title ] pairs.

We use block Extract Strings for this, which makes the selected string property of a node explicit as a second column next to the node itself.

Why do we perform this text extraction explicitly and pass [OBJ,STRING] in input to the SOURCE connector, rather than passing [OBJ] in input (just the movies) and letting the rank block use the desired text property (the title)? As shown in the next section, this choice allows for greater flexibility in more complex scenarios.

Block Term list provides input for the QTERMS [STRING] connector of the rank block. Similarly to what we did in the previous post when ranking by ratings, we instantiate the keywords with the value of a query parameter keywords, and select from a testset to simulate user input ('blade runner' in the screenshot).

For the daring reader

Block Rank by Text BM25 offers a number of parameters to influence the ranking results, that we can divide in 3 parts:

- Input pre-processing. Before both node input text and query text are used in the retrieval model, they are pre-processed and normalised in several ways:

- Tokenisation: all text is split up into tokens (loosely equivalent to words) for the retrieval model to work on. These parameters control how exactly tokens are identified.

- Stemming: if a language-specific stemming method is selected, only the stem of each token is used. This allows to consider words with same stem and different inflections as equal (e.g. 'ranking' and 'ranked' share the stem 'rank'), trading precision for flexibility.

- Normalisations: currently include ignoring text case, removing diacritics and transliterating non-ASCII characters (e.g. 'Höß' to 'hoss').

- Model-specific parameters.

- Parameter k1 (saturation): normally, multiple occurrences of a term within a text indicate relevance of that text to the term. The lower the value of k1, the quicker the number of term occurrences loses importance.

- Parameter b (length normalisation): the lower the value, the more each text's fragment length influences the result (typically, short fragments get higher scores)

- Parameter All query terms must match: if checked, an 'AND' logic between query terms is assumed. If unchecked, an 'OR' logic is assumed.

- Output parameters.

- Parameter Score Normalisation can be used to normalise the final scores produced by the BM25 algorithm, which can be greater than 1, so that they can be interpreted as probabilities in the remainder of the strategy. The default choice, MAX, divides all scores by the maximum score.

Ranking across multiple properties

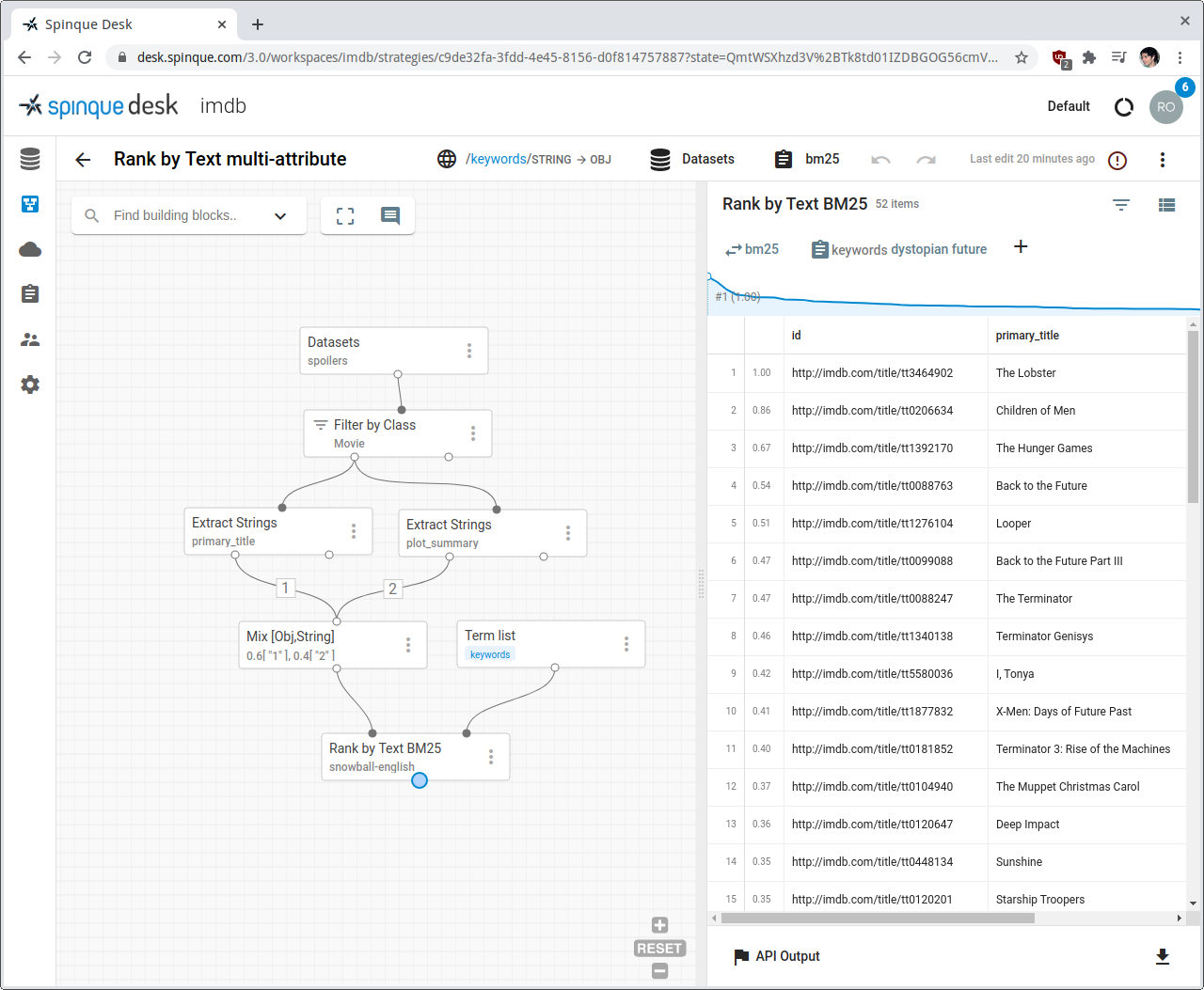

Suppose we want to find movies about a story that takes place in a dystopian future. Using the previous strategy to rank movies by title with keywords 'dystopian future' won't return much more than the Back to the Future trilogy. Though this would be a result with good precision, it would miss the very many other movies about a dystopian future, resulting in very low recall.

Movie titles are usually catchy phrases that reveal very little about what the movie is about. They are so short and specific that text ranking struggles to express its power and almost falls back to boolean word match.

A plot summary contains more elaborate text that we can expect to be more suited for this type of search. At the same time, we don't want to stop matching some titles with precision.

Mixing properties

One possible approach to consider both titles and plot summaries for our text search is to extract both, union them, and feed the resulting bags of words (one bag per movie) to the rank block.

The Mix block performs the union of any number of type-compatible (in this case [OBJ,STRING]) streams. It also allows to set a weight to each input stream. In the screenshot, we have set weights 0.6 and 0.4 to title and plot summary, respectively.

Notice that once mixed, all the terms contained in titles and plot summary of each movie end up in the same bag-of-words (though with possibly different weights). That is, it will no longer be possible to distinguish which terms came from the title and which from the plot summary. In a way, we are creating a new artificial property that combines the two original ones.

For the daring reader

This scenario shows why the choice of passing [OBJ,STRING] in input to the block Rank by Text BM25 is more flexible than passing [OBJ] and selecting one property as the source of the text to consider. It allows us to first construct bags-of-words as we please.

This is a special case that is in contrast with blocks Rank By Integer, and Rank By Double Rank By Date, which accept [OBJ] in input. There is no need to extract those numbers and dates explicitly, as the ranking algorithms implemented by such blocks wouldn't be able to make sense of values coming from multiple properties.

Mixing rankings

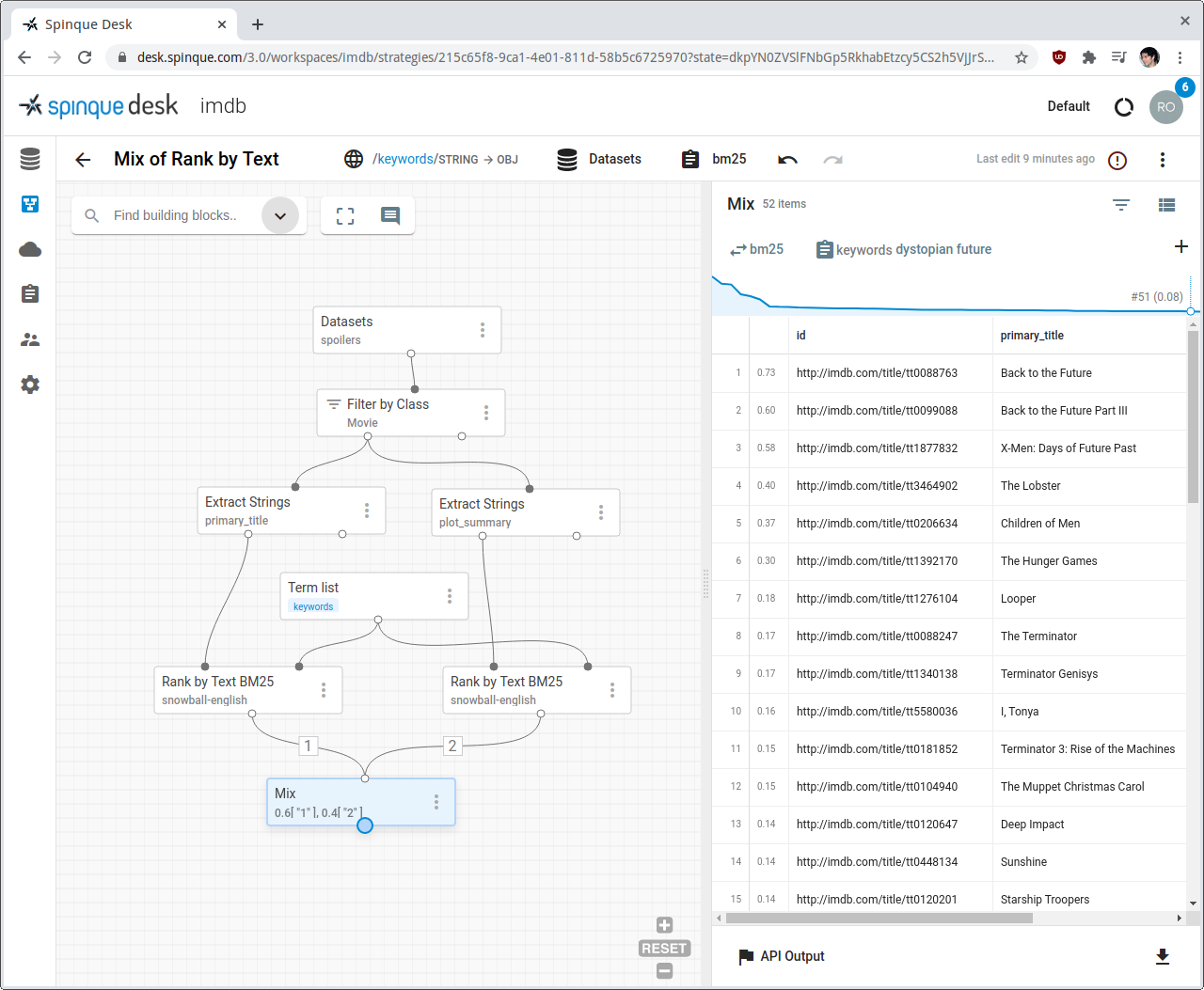

We have just seen a first approach for ranking movies by both title and plot summary: the text from the two properties is merged into one bag.

A second approach merges the results of two independent searches, performed on each property.

The rankings obtained with the two approaches are similar but not identical, as they use slightly different statistics and combine them at different stages.

Notice that ranking independently on the two properties is a more general approach. It allows us to merge rankings obtained by any combination of properties and property types. It also allows us to choose different parameters in their respective search blocks. For example, titles tend to favour more precise matches to keywords (case-sensitive, full tokenisation, no length normalisation), while plot summary may be better searched with more relaxed parameters (case-insensitive, tokens with no digits or punctuation, standard document-length normalisation).

Which approach should one use? It depends on the task at hand, but a good rule of thumb can be: retrieval models tend to give best results when not dealing with apples and pears in the same bag.

Are the properties to search on similar in nature? Do they have similar length, language, purpose (e.g. a plot summary and a review)? Then mix them in one bag-of-words before ranking, possibly assigning different weights.

In all other cases, it is probably best to treat the ranking of different properties as different ranking branches to be merged at the end, thus choosing the second approach.

The next and last part of this mini-series will explore a ranking approach that exploits the very nature and structure of graphs, and it will finally put together all we have learned so far. Don't forget to check it out!