Linked Open Images

Posted on 03/07/2015 by Michiel Hildebrand

In a previous blog post, we introduced CultuurLINK as a service for the cultural heritage community to integrate collections by linking their controlled vocabularies. As an example we showed how to connect the internal subject term list from the NIOD Institute for War, Holocaust and Genocide Studies with a large audio visual thesaurus (GTAA) from the Netherlands Institute for Sound and Vision.

Today, we put these newly created links into action. Linked Open Images is a mobile application to explore historic material about World War II. It gives access to three Open Data collections: (1) historic Dutch news reels shared by Sound and Vision, and (2) photographs and (3) books shared by NIOD.

The front-end builds upon CWI's enriched video experiences, an application developed in LinkedTV (a recently completed EU research project). The user watching a video can, at any time, pull up a panel to explore related information. Within Comsode we extended the application to operate on mobile phones, tailored the design to the use case and integrated it with Spinque. The exploratory mode in our use case extends the experience of viewing Sound and Vision's historic news reels with immediate access to the most related NIOD books and photographs. In addition, the application suggests other news reels about the same topic, for the user to watch next.

The entire backend of the application is created by modeling Spinque strategies, just like we described in the older blog post Building an Open Data application with Spinque. In this case, we modeled different strategies to (1) search for videos, (2) find related photographs and books, and (3) suggest related videos.

In other words, we tailored the generic Linked Video application to this use case by modeling the search strategies. To create a similar user experience for different collections, the only prerequisite is to model the search: a novel workflow we call search by strategy!

Even though the collections used in the application are available as Open Data and accessible through the OAI-PMH harvesting protocol, we still need to prepare the data for the application. Using the Comsode Open Data Node, we harvested the data, cleaned it, enriched it and linked it. We now introduce the application and Spinque's search strategies over the integrated data. Linking controlled vocabularies with CultuurLINK was described in last week's blog post, and the details of the harvesting, cleaning and enriching will be given in a later post.

Frontend: mobile application

View historic news reels on your mobile device:

Screenshot Linked Open Images: list of videos matching the query 'bevrijding amsterdam'

A list of news reels is shown upon opening the application. The user can also search for a specific video with the search bar. The screenshot above shows how the user searches for videos of the liberation of Amsterdam ('bevrijding' in Dutch).

Screenshot Linked Open Images: video player showing a Dutch news reel about the liberation of Amsterdam

When the user selects a video, the application switches to the player. The screenshot above shows the video player with the video about the liberation of Amsterdam. The video player provides the default functionality (pausing/playing, timebar with seeking functionality, volume control) and in addition contains a button to enable the 'explore panel' (situated top right).

Screenshot Linked Open Images: explore panel showing photograph related to the liberation of Amsterdam

This 'explore panel' contains three tabs to group different types of related information. The first tab contains related photographs retrieved from the NIOD collection, which the user may swipe through. The screenshot above, for example, shows a photograph of a crowd gathering at the Dam Square in Amsterdam right after the liberation. By clicking the info button on one of the photographs, additional information about the photograph is revealed.

Screenshot Linked Open Images: third tab showing related videos

Similarly, the second tab contains references to books from the NIOD library. The third and final tab contains related videos, retrieved from the news reel collection. In the screenshot above, the first suggestion is a video of the liberation speech given by the mayor of Amsterdam. Selecting one of the suggested items from this tab loads this video into the player. Of course, while viewing this new video, the user may again open the explore panel and bring up information related to this footage, and so on.

Backend: Spinque strategies

The creation of the application's backend required no programming whatsoever! All this search-based functionality is readily provided by modeling six different Spinque strategies. The start screen is backed by two search strategies: one to get the initial list of videos (news reels) and another to search for videos. The video player uses a third strategy to fetch detailed information about a video, such as the stream URL and the duration of the video. The explore panel is supported by three more strategies that express recommendations; these identify the related information shown in each tab: photographs, books and videos.

Let us elaborate on these three recommendation strategies, which all follow the same recommendation pattern: as input they take the current item (the video), information is extracted from this item, and related items are retrieved using this extracted information. Within this pattern, the three strategies differ from each other because the data sources each contain different types of information.
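To make the pattern concrete, here is a minimal Python sketch of it. This is not how Spinque implements strategies (those are modeled graphically, without code); the function names, the item layout and the overlap-based scoring are all assumptions chosen purely for illustration.

```python
from typing import Any, Callable, Dict, List, Set

Item = Dict[str, Any]


def recommend(current: Item, candidates: List[Item],
              extract: Callable[[Item], Set[str]]) -> List[Item]:
    """Take the current item, extract information, retrieve related items."""
    features = extract(current)  # step 2: extract information from the input
    scored = []
    for candidate in candidates:
        if candidate["id"] == current["id"]:
            continue  # never recommend the item the user is already viewing
        overlap = len(features & extract(candidate))
        if overlap > 0:
            scored.append((overlap, candidate))
    # step 3: return related items, most shared features first
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [candidate for _, candidate in scored]


def subject_terms(item: Item) -> Set[str]:
    """Hypothetical extractor: the subject terms attached to an item."""
    return set(item.get("subjects", []))


# Illustrative data only; identifiers and terms are made up for this sketch.
video = {"id": "video-1", "subjects": ["liberation", "parades", "jeeps"]}
photos = [
    {"id": "photo-1", "subjects": ["liberation"]},
    {"id": "photo-2", "subjects": ["liberation", "parades"]},
    {"id": "photo-3", "subjects": ["harbour"]},
]

related = recommend(video, photos, subject_terms)
print([photo["id"] for photo in related])  # ['photo-2', 'photo-1']
```

Swapping in a different `extract` function (for example one that returns locations, or terms from the description) gives a different recommender with the same overall shape, which mirrors how the three strategies differ only in the information they use.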

http://www.openbeelden.nl/120155

rdf:type dcmitype:MovingImage

dc:title 'Bevrijdingsbeelden Amsterdam'

dc:description 'Reportage van de intocht der Canadezen en Britten in de hoofdstad...'

dc:date '8-1-1945'

dc:coverage gtaa:34040, gtaa:31586, ...

dc:subject gtaa:215996, gtaa:217248, ...

dc:publisher 'Nederlands Instituut voor Beeld en Geluid'

...

http://www.beeldbankwo2.nl/detail_no.jsp?action=detail&imid=110408

rdf:type dcmitype:Image

dc:description 'Een menigte mensen op Dam, met name in georganiseerd verband ...'

dc:coverage gtaa:34040, gtaa:31586, ...

dc:subject niod:Bevrijding, niod:Massabijeenkomsten, ...

...

The strategy to recommend photographs from the NIOD beeldbank given a news reel uses three types of metadata: textual, subject terms and locations. The figure above shows a snippet of news reel metadata from the Netherlands Institute for Sound and Vision, and another snippet of photograph metadata from the NIOD. For the videos, textual metadata is available in the form of a title (dc:title) and a description (dc:description). The subject terms (dc:subject) and the depicted location (dc:coverage) are taken from the audio visual thesaurus of the institute (GTAA). Subject terms include 'liberation', 'parades', 'jeeps', 'street footage', and 'resistance fighters' while locations include 'Amsterdam' and 'Dam square'. For the photographs the textual metadata is only available in the form of a description while locations (dc:coverage) are taken from GTAA. For this example, the locations also include 'Amsterdam' and 'Dam square'. The subject terms (dc:subject) are taken from the NIOD term list, examples including 'liberation' and 'mass parades'. Please refer to the previous post in this series to revisit how we used CultuurLINK to align the NIOD term list with the GTAA.

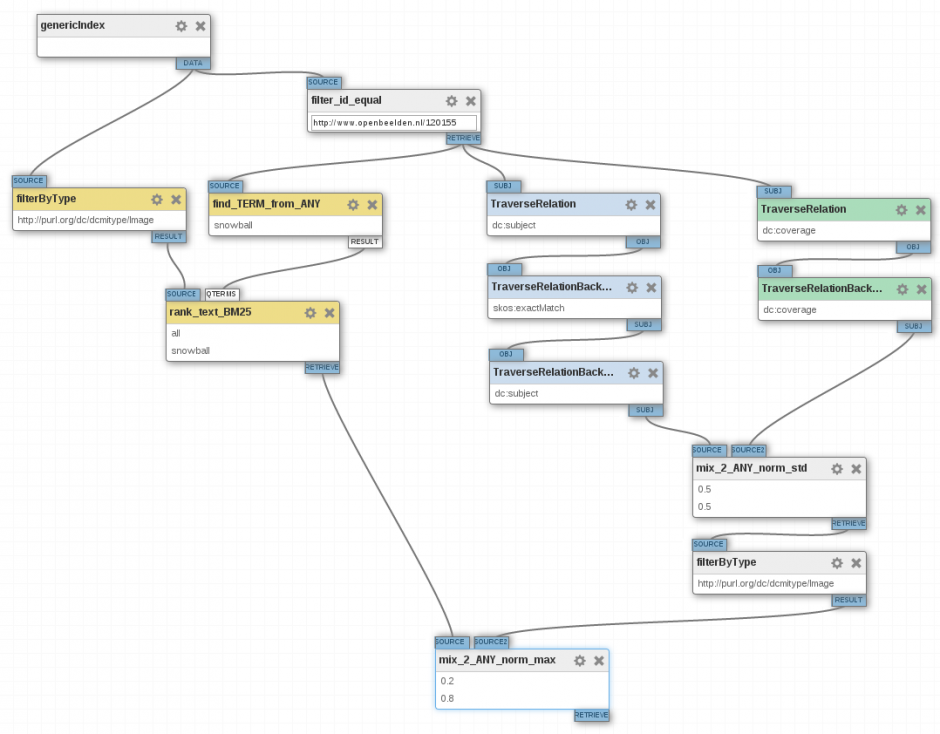

Spinque search strategy to get photographs related to a news reel

The figure above shows the entire strategy for the recommendation of photographs given a video. The strategy starts at the top with a block that holds all data (genericIndex). The next block selects from this data the input video (filter_id_equal). This block is configured as an input block. This means that in the API corresponding to this strategy the value of this block has to be provided as a parameter. In this case the input is provided by the identifier of the video (a URL).

Below the input block, the strategy contains three streams, one for each type of metadata. The blocks of each stream are shown in a different color. Together, the yellow blocks find the photographs whose textual metadata is similar to the title and description of the input video. This is achieved by first extracting the terms contained in the title and description of the input video (find_TERM_from_ANY). These terms are then used as a query to find other items, using the block rank_text_BM25. The items that we search for are restricted to photographs by filtering the dataset to items of type Image, using the block filterByType. The output of this stream consists of photographs described by terms similar to those of the input video, ranked using the BM25 algorithm. Roughly speaking, this algorithm gives more weight to terms that are rare in the collection, and therefore more distinctive, than to terms that occur in many descriptions.
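For readers who want to see what BM25 ranking amounts to, the sketch below implements the textbook formula in plain Python. It is not the code behind the rank_text_BM25 block, whose internals are not described in this post; the tokenized descriptions, the document identifiers and the parameter values k1 = 1.2 and b = 0.75 are assumptions for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def bm25_scores(query: List[str], docs: Dict[str, List[str]],
                k1: float = 1.2, b: float = 0.75) -> Dict[str, float]:
    """Score every document against the query with textbook BM25."""
    n_docs = len(docs)
    avg_len = sum(len(tokens) for tokens in docs.values()) / n_docs

    # Document frequency: in how many documents does each term occur?
    doc_freq: Counter = Counter()
    for tokens in docs.values():
        doc_freq.update(set(tokens))

    scores = {}
    for doc_id, tokens in docs.items():
        term_freq = Counter(tokens)
        score = 0.0
        for term in set(query):
            tf = term_freq[term]
            if tf == 0:
                continue
            # Rare terms get a higher inverse document frequency.
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5)
                           / (doc_freq[term] + 0.5))
            # Term frequency saturates and is normalized by document length.
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf * (k1 + 1) / (tf + length_norm)
        scores[doc_id] = score
    return scores


# Terms extracted from the video's title and description (illustrative).
video_terms = ["bevrijding", "amsterdam", "dam"]

# Hypothetical, already tokenized photograph descriptions.
photo_descriptions = {
    "photo_dam": ["menigte", "dam", "amsterdam", "bevrijding"],
    "photo_rotterdam": ["haven", "rotterdam", "bevrijding"],
    "photo_portrait": ["portret", "soldaat"],
}

scores = bm25_scores(video_terms, photo_descriptions)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

In this toy collection 'bevrijding' occurs in two of the three descriptions, so it contributes less per match than 'amsterdam' or 'dam', which occur in only one.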

In the textual stream (yellow blocks) we had to extract terms from the title and description. In the case of subjects (blue blocks) and locations (green blocks), the terms to use have been modeled explicitly as relations to concepts from the GTAA thesaurus or the NIOD term list (dc:subject and dc:coverage). To find photographs related to a video, we simply traverse these relations via the concepts. For the locations, this is straightforward because videos and photographs are both described using GTAA: we traverse the dc:coverage relation from the video to the GTAA concept (e.g. Dam Square) using the block traverseRelation, and we traverse the same relation in the opposite direction, using the block traverseRelationBackwards, to find all other items related to this concept. For the subject terms we need one additional block to traverse the link between the GTAA concept and the corresponding term in the NIOD term list. This block is configured to follow the skos:exactMatch relation.
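The two traversal streams can be mimicked in a few lines of Python over a small set of triples. This is only a sketch of the idea, not the Spinque blocks themselves. The identifiers of the video, the photograph and their concepts are taken from the metadata snippets above, but the skos:exactMatch link between gtaa:215996 and niod:Bevrijding, the second photograph and its location concept are assumptions made up for the example.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def traverse(triples: List[Triple], sources: Set[str], predicate: str) -> Set[str]:
    """Follow `predicate` forwards: from the given subjects to their objects."""
    return {o for s, p, o in triples if p == predicate and s in sources}


def traverse_backwards(triples: List[Triple], targets: Set[str], predicate: str) -> Set[str]:
    """Follow `predicate` backwards: from the given objects to their subjects."""
    return {s for s, p, o in triples if p == predicate and o in targets}


VIDEO = "http://www.openbeelden.nl/120155"
PHOTO = "http://www.beeldbankwo2.nl/detail_no.jsp?action=detail&imid=110408"
OTHER_PHOTO = "example:unrelated-photo"  # hypothetical item

TRIPLES: List[Triple] = [
    (VIDEO, "rdf:type", "dcmitype:MovingImage"),
    (VIDEO, "dc:coverage", "gtaa:34040"),
    (VIDEO, "dc:subject", "gtaa:215996"),
    (PHOTO, "rdf:type", "dcmitype:Image"),
    (PHOTO, "dc:coverage", "gtaa:34040"),
    (PHOTO, "dc:subject", "niod:Bevrijding"),
    # Assumed alignment; the real links were created in CultuurLINK.
    ("gtaa:215996", "skos:exactMatch", "niod:Bevrijding"),
    (OTHER_PHOTO, "rdf:type", "dcmitype:Image"),
    (OTHER_PHOTO, "dc:coverage", "example:other-location"),
    (OTHER_PHOTO, "dc:subject", "niod:Massabijeenkomsten"),
]

# Restrict results to photographs, analogous to filtering by type Image.
photographs = traverse_backwards(TRIPLES, {"dcmitype:Image"}, "rdf:type")

# Location stream: video -> GTAA location -> items with the same location.
locations = traverse(TRIPLES, {VIDEO}, "dc:coverage")
by_location = traverse_backwards(TRIPLES, locations, "dc:coverage") & photographs

# Subject stream: one extra hop over skos:exactMatch to reach NIOD terms.
gtaa_subjects = traverse(TRIPLES, {VIDEO}, "dc:subject")
niod_subjects = traverse(TRIPLES, gtaa_subjects, "skos:exactMatch")
by_subject = traverse_backwards(TRIPLES, niod_subjects, "dc:subject") & photographs

print(by_location)
print(by_subject)
```

Without the skos:exactMatch hop the subject stream would find nothing here, because the video and the photograph use terms from different vocabularies.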

The previous blog post together with this one demonstrates how advanced search applications over heterogeneous collections can be built without programming. Data integration was done in CultuurLINK by creating link strategies that align vocabularies. The API powering the Linked Open Images application is created by modeling the search strategies in Spinque. Curious about the technology behind all this? In our next blog post we will take a look under the hood, giving you a peek at the upcoming Spinque 2.0.